Evaluation Metrics for Classification Problems

Terms

- True Positives (TP): should be TRUE, you predicted TRUE

- False Positives (FP): should be FALSE, you predicted TRUE

- True Negatives (TN): should be FALSE, you predicted FALSE

- False Negatives (FN): should be TRUE, you predicted FALSE

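In my view, TP and TN are easy to understand: both count the cases the model got right. FP and FN are easier to mix up, so an example helps tell them apart:

Zhang San goes to the doctor to find out whether he has cancer. FP => misdiagnosis (he is told he has cancer when he does not). FN => the cancer was missed (he has it, but the test did not catch it).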
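The four terms can be counted directly from paired labels. Below is a minimal sketch in plain Python; the function name `confusion_counts` and the sample data are made up for illustration, with `True` standing for "has cancer".

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, TN, FN for binary labels given as booleans."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted and actual:
            tp += 1  # should be TRUE, predicted TRUE
        elif predicted and not actual:
            fp += 1  # should be FALSE, predicted TRUE (misdiagnosis)
        elif not predicted and not actual:
            tn += 1  # should be FALSE, predicted FALSE
        else:
            fn += 1  # should be TRUE, predicted FALSE (missed case)
    return tp, fp, tn, fn


# Hypothetical data: six patients, True means "has cancer".
y_true = [True, True, False, False, False, True]
y_pred = [True, False, True, False, False, True]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print(tp, fp, tn, fn)  # 2 1 2 1
```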

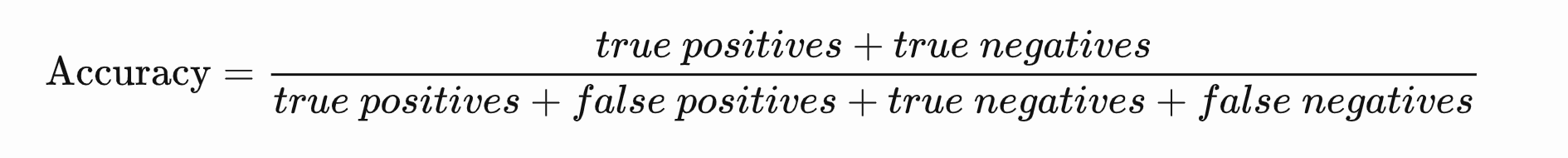

Accuracy

What percentage of my predictions are correct? This measures the overall correctness of the model's predictions.

- Good for single-label, binary classification.

- Not good for imbalanced datasets: accuracy is heavily affected by class imbalance.

If, in the dataset, 99% of samples are TRUE and you blindly predict TRUE for everything, you’ll have 0.99 accuracy, but you haven’t actually learned anything.
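The 99% scenario is easy to reproduce. The sketch below assumes the usual definition of accuracy, (TP + TN) / (TP + TN + FP + FN), which is simply the fraction of predictions that match the labels. The helper `accuracy` and the data are illustrative only.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(1 for actual, predicted in zip(y_true, y_pred) if actual == predicted)
    return correct / len(y_true)


# Hypothetical imbalanced dataset: 99 TRUE samples and 1 FALSE sample.
y_true = [True] * 99 + [False]

# A "model" that blindly predicts TRUE for everything.
y_pred = [True] * 100

acc = accuracy(y_true, y_pred)
print(acc)  # 0.99, even though nothing was learned
```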

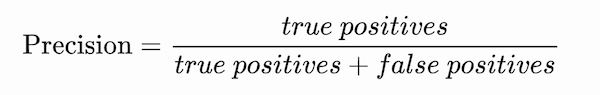

Precision

Of the points that I predicted TRUE, how many are actually TRUE? That is, the proportion of true positives among everything the model predicted as positive.

- Good for multi-label / multi-class classification and information retrieval

- Good for unbalanced datasets
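In terms of the counts defined earlier, precision is commonly written as TP / (TP + FP). A minimal sketch follows; the function name, the sample data, and the choice to return 0.0 when nothing is predicted TRUE are assumptions made for this example.

```python
def precision(y_true, y_pred):
    """TP / (TP + FP): of the predicted positives, how many are real positives."""
    tp = sum(1 for a, p in zip(y_true, y_pred) if p and a)
    fp = sum(1 for a, p in zip(y_true, y_pred) if p and not a)
    if tp + fp == 0:
        return 0.0  # assumed convention when the model predicts no positives
    return tp / (tp + fp)


# Hypothetical data: the model predicts TRUE four times and is right three times.
y_true = [True, True, True, False, False, False, False, False]
y_pred = [True, True, True, True, False, False, False, False]

prec = precision(y_true, y_pred)
print(prec)  # 0.75
```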

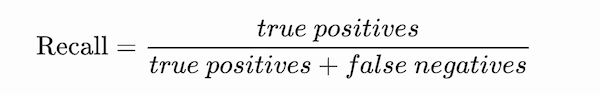

Recall

Of all the points that are actually TRUE, how many did I correctly predict? That is, the proportion of actual positives that the model predicted as positive.

- Good for multi-label / multi-class classification and information retrieval

- Good for unbalanced datasets
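Recall is commonly written as TP / (TP + FN). The sketch below uses a made-up imbalanced dataset to show why it is informative where accuracy is not: the model is right on 97 of 100 samples, yet it finds only one of the four real positives. The function name and the zero-division convention are assumptions.

```python
def recall(y_true, y_pred):
    """TP / (TP + FN): of the real positives, how many did the model find."""
    tp = sum(1 for a, p in zip(y_true, y_pred) if a and p)
    fn = sum(1 for a, p in zip(y_true, y_pred) if a and not p)
    if tp + fn == 0:
        return 0.0  # assumed convention when there are no real positives
    return tp / (tp + fn)


# Hypothetical data: 4 real positives among 100 samples.
y_true = [True] * 4 + [False] * 96

# The model flags only the first sample as positive.
y_pred = [True] + [False] * 99

rec = recall(y_true, y_pred)
print(rec)  # 0.25, although 97 of the 100 predictions are correct
```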

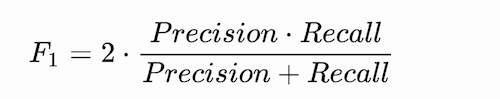

F1

Can you give me a single metric that balances precision and recall? The F1-score (also called the F1-measure) is a combined metric that takes both precision and recall into account.

- Gives equal weight to precision and recall

- Good for unbalanced datasets
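F1 is usually defined as the harmonic mean of precision and recall, 2 * P * R / (P + R), which is what gives the two equal weight. A small sketch, with a hypothetical helper name and made-up input values:

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention when both are zero
    return 2 * precision * recall / (precision + recall)


# Made-up values: a reasonably balanced model.
balanced = f1_score(0.75, 0.5)
print(balanced)

# Made-up values: perfect precision but almost no recall.
lopsided = f1_score(1.0, 0.01)
print(lopsided)
```

Because the harmonic mean is pulled toward the smaller of the two values, the second case scores close to 0.02 rather than the roughly 0.5 an arithmetic mean would give.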

AUC (Area under ROC Curve)

Is my model better than just random guessing?

… To be determined …

Unless otherwise stated, all articles on this blog are licensed under CC BY-SA 4.0. Please credit the source when reposting!